In his 1944 classic, The Great Transformation, the economic historian Karl Polanyi told the story of modern capitalism as a “double movement” that led to both the expansion of the market and its restriction. During the eighteenth and early nineteenth centuries, old feudal restraints on commerce were abolished, and land, labor, and money came to be treated as commodities. But unrestrained capitalism ravaged the environment, damaged public health, and led to economic panics and depressions, and by the time Polanyi was writing, societies had reintroduced limits on the market.

Shoshana Zuboff, a professor emerita at the Harvard Business School, sees a new version of the first half of Polanyi’s double movement at work today with the rise of “surveillance capitalism,” a new market form pioneered by Facebook and Google. In The Age of Surveillance Capitalism, she argues that capitalism is once again extending the sphere of the market, this time by claiming “human experience as free raw material for hidden commercial practices of extraction, prediction, and sales.” With the rise of “ubiquitous computing” (the spread of computers into all realms of life) and the Internet of Things (the connection of everyday objects to the Internet), the extraction of data has become pervasive. We live in a world increasingly populated with networked devices that capture our communications, movements, behavior, and relationships, even our emotions and states of mind. And, Zuboff warns, surveillance capitalism has thus far escaped the sort of countermovement described by Polanyi.

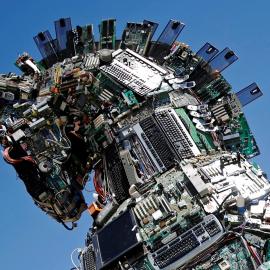

Networked devices capture our communications, movements, behavior, and relationships.

Zuboff’s book is a brilliant, arresting analysis of the digital economy and a plea for a social awakening about the enormity of the changes that technology is imposing on political and social life. Most Americans see the threats posed by technology companies as matters of privacy. But Zuboff shows that surveillance capitalism involves more than the accumulation of personal data on an unprecedented scale. The technology firms and their experts—whom Zuboff labels “the new priesthood”—are creating new forms of power and means of behavioral modification that operate outside individual awareness and public accountability. Checking this priesthood’s power will require a new countermovement—one that restrains surveillance capitalism in the name of personal freedom and democracy.

THE RISE OF THE MACHINES

A reaction against the power of the technology industry is already underway. The U.S. Justice Department and the Federal Trade Commission are conducting antitrust investigations of Amazon, Apple, Facebook, and Google. In July, the FTC levied a $5 billion fine on Facebook for violating promises to consumers that the company made in its own privacy policies (the United States, unlike the European Union, has no general law protecting online privacy). Congress is considering legislation to limit technology companies’ use of data and roll back the broad immunity from liability for user-generated content that it granted them in the Communications Decency Act of 1996. This national debate, still uncertain in its ultimate impact, makes Zuboff’s book all the more timely and relevant.

The rise of surveillance capitalism also has an international dimension. U.S. companies have long dominated the technology industry and the Internet, arousing suspicion and opposition in other countries. Now, chastened by the experience of Russian interference in the 2016 U.S. presidential election, Americans are getting nervous about stores of personal data falling into the hands of hostile foreign powers. In July of this year, there was a viral panic about FaceApp, a mobile application for editing pictures of faces that millions of Americans had downloaded to see projected images of themselves at older ages. Created by a Russian firm, the app was rumored to be used by Russian intelligence to gather facial recognition data, perhaps to create deepfake videos—rumors that the firm has denied. Early last year, a Chinese company’s acquisition of the gay dating app Grindr stirred concern about the potential use of the app’s data to compromise individuals and U.S. national security; the federal Committee on Foreign Investment in the United States has since ordered the Chinese firm to avoid accessing Grindr’s data and divest itself entirely of Grindr by June 2020. It is not hard to imagine how the rivalry between the United States and China could lead not only to a technology divorce but also to two different worlds of everyday surveillance.

According to Zuboff, surveillance capitalism originated with the brilliant discoveries and brazen claims of one American firm. “Google,” she writes, “is to surveillance capitalism what the Ford Motor Company and General Motors were to mass-production-based managerial capitalism.” Incorporated in 1998, Google soon came to dominate Internet search. But initially, it did not focus on advertising and had no clear path to profitability. What it did have was a groundbreaking insight: the collateral data it derived from searches—the numbers and patterns of queries, their phrasing, people’s click patterns, and so on—could be used to improve Google’s search results and add new services for users. This would attract more users, which would in turn further improve its search engine in a recursive cycle of learning and expansion.

Google’s commercial breakthrough came in 2002, when it saw that it could also use the collateral data it collected to profile the users themselves according to their characteristics and interests. Then, instead of matching ads with search queries, the company could match ads with individual users. Targeting ads precisely and efficiently to individuals is the Holy Grail of advertising. Rather than being Google’s customers, Zuboff argues, the users became its raw-material suppliers, from whom the firm derived what she calls “behavioral surplus.” That surplus consists of the data above and beyond what Google needs to improve user services. Together with the company’s formidable capabilities in artificial intelligence, Google’s enormous flows of data enabled it to create what Zuboff sees as the true basis of the surveillance industry—“prediction products,” which anticipate what users will do “now, soon, and later.” Predicting what people will buy is the key to advertising, but behavioral predictions have obvious value for other purposes, as well, such as insurance, hiring decisions, and political campaigns.

Zuboff’s analysis helps make sense of the seemingly unrelated services offered by Google, its diverse ventures and many acquisitions. Gmail, Google Maps, the Android operating system, YouTube, Google Home, even self-driving cars—these and dozens of other services are all ways, Zuboff argues, of expanding the company’s “supply routes” for user data both on- and offline. Asking for permission to obtain those data has not been part of the company’s operating style. For instance, when the company was developing Street View, a feature of its mapping service that displays photographs of different locations, it went ahead and recorded images of streets and homes in different countries without first asking for local permission, fighting off opposition as it arose. In the surveillance business, any undefended area of social life is fair game.

This pattern of expansion reflects an underlying logic of the industry: in the competition for artificial intelligence and surveillance revenues, the advantage goes to the firms that can acquire both vast and varied streams of data. The other companies engaged in surveillance capitalism at the highest level—Amazon, Facebook, Microsoft, and the big telecommunications companies—also face the same expansionary imperatives. Step by step, the industry has expanded both the scope of surveillance (by migrating from the virtual into the real world) and the depth of surveillance (by plumbing the interiors of individuals’ lives and accumulating data on their personalities, moods, and emotions).

The surveillance industry has not faced much resistance because users like its personalized information and free products. Indeed, they like them so much that they readily agree to onerous, one-sided terms of service. When the FaceApp controversy blew up, many people who had used the app were surprised to learn that they had agreed to give the company “a perpetual, irrevocable, nonexclusive, royalty-free, worldwide, fully-paid, transferable sub-licensable license to use, reproduce, modify, adapt, publish, translate, create derivative works from, distribute, publicly perform and display your User Content and any name, username or likeness provided in connection with your User Content in all media formats and channels now known or later developed, without compensation to you.” But this wasn’t some devious Russian formulation. As Wired pointed out, Facebook’s terms of service are just as onerous.

Even if Congress enacts legislation barring companies from imposing such extreme terms, it is unlikely to resolve the problems Zuboff raises. Most people are probably willing to accept the use of data to personalize their services and display advertising predicted to be of interest to them, and Congress is unlikely to stop that. The same processes of personalization, however, can be used to modify behavior and beliefs. This is the core concern of Zuboff’s book: the creation of a largely covert system of power and domination.

MAKE THEM DANCE

From extracting data and making predictions, the technology firms have gone on to intervening in the real world. After all, what better way to improve predictions than to guide how people act? The industry term for shaping behavior is “actuation.” In pursuit of actuation, Zuboff writes, the technology firms “nudge, tune, herd, manipulate, and modify behavior in specific directions by executing actions as subtle as inserting a specific phrase into your Facebook news feed, timing the appearance of a BUY button on your phone, or shutting down your car engine when an insurance payment is late.”

Evidence of the industry’s capacity to modify behavior on a mass scale comes from two studies conducted by Facebook. During the 2010 U.S. congressional elections, the company’s researchers ran a randomized, controlled experiment on 61 million users. Users were split up into three groups. Two groups were shown information about voting (such as the location of polling places) at the top of their Facebook news feeds; users in one of these groups also received a social message containing up to six pictures of Facebook friends who had already voted. The third group received no special voting information. The intervention had a significant effect on those who received the social message: the researchers estimated that the experiment led to 340,000 additional votes being cast. In a second experiment, Facebook researchers tailored the emotional content of users’ news feeds, in some cases reducing the number of friends’ posts expressing positive emotions and in other cases reducing their negative posts. They found that those who viewed more negative posts in their news feeds went on to make more negative posts themselves, demonstrating, as the title of the published article about the study put it, “massive-scale emotional contagion through social networks.”

The 2016 Brexit and U.S. elections provided real-world examples of covert disinformation delivered via Facebook. Not only had the company previously allowed the political consulting firm Cambridge Analytica to harvest personal data on tens of millions of Facebook users; during the 2016 U.S. election, it also permitted microtargeting of “unpublished page post ads,” generally known as “dark posts,” which were invisible to the public at large. These were delivered to users as part of their news feeds along with regular content, and when users liked, commented on, or shared them, their friends saw the same ads, now personally endorsed. But the dark posts then disappeared and were never publicly archived. Microtargeting of ads is not inherently illegitimate, but when social media deliver such messages outside the public sphere, journalists are unable to police deception and political opponents cannot rebut attacks. The delivery of covert disinformation on a mass basis is fundamentally inimical to democratic debate.

Facebook has since eliminated dark posts and made other changes in response to public criticism, but Zuboff is still right about this central point: “Facebook owns an unprecedented means of behavior modification that operates covertly, at scale, and in the absence of social or legal mechanisms of agreement, contest, and control.” No law, for example, bars Facebook from adjusting its users’ news feeds to favor one political party or another (and in the United States, such a law might well be held unconstitutional). As a 2018 study by The Wall Street Journal showed, YouTube’s recommendation algorithm was feeding viewers videos from ever more extreme fringe groups. That algorithm and others represent an enormous source of power over beliefs and behavior.

Surveillance capitalism, according to Zuboff, is moving society in a fundamentally antidemocratic direction. With the advent of ubiquitous computing, the industry dreams of creating transportation systems and whole cities with built-in mechanisms for controlling behavior. Using sensors, cameras, and location data, Sidewalk Labs, a subsidiary of Google’s parent company, Alphabet, envisions a “for-profit city” with the means of enforcing city regulations and with dynamic online markets for city services. The system would require people to use Sidewalk’s mobile payment system and allow the firm, as its CEO, Dan Doctoroff, explained in a 2016 talk, to “target ads to people in proximity, and then obviously over time track them through things like beacons and location services as well as their browsing activity.” One software developer for an Internet of Things company told Zuboff, “We are learning how to write the music, and then we let the music make them dance.”

Such aspirations imply a radical inequality of power between the people who control the playlist and the people who dance to it. In the last third of her book, Zuboff takes her analysis up a level, identifying the theoretical ideas and general model of society that she sees as implicit in surveillance capitalism. The animating idea behind surveillance capitalism, Zuboff says, is that of the psychologist B. F. Skinner, who regarded the belief in human freedom as an illusion standing in the way of a more harmonious, controlled world. Now, in Zuboff’s view, the technology industry is developing the means of behavior modification to carry out Skinner’s program.

The emerging system of domination, Zuboff cautions, is not totalitarian; it has no need for violence and no interest in ideological conformity. Instead, it is what she calls “instrumentarian”—it uses everyday surveillance and actuation to channel people in directions preferred by those in control. As an example, she describes China’s efforts to introduce a social credit system that scores individuals by their behavior, their friends, and other aspects of their lives and then uses this score to determine each individual’s access to services and privileges. The Chinese system fuses instrumentarian power and the state (and it is interested in political conformity), but its emerging American counterpart may fuse instrumentarian power and the market.

NO FUTURE?

The Age of Surveillance Capitalism is a powerful and passionate book, the product of a deep immersion in both technology and business that is also informed by an understanding of history and a commitment to human freedom. Zuboff seems, however, unable to resist the most dire, over-the-top formulations of her argument. She writes, for example, that the industry has gone “from automating information flows about you to automating you.” An instrumentarian system of behavior modification, she says, is not just a possibility but an inevitability, driven by surveillance capitalism’s own internal logic: “Just as industrial capitalism was driven to the continuous intensification of the means of production, so surveillance capitalists are . . . now locked in a cycle of continuous intensification of the means of behavioral modification.”

As a warning, Zuboff’s argument deserves to be heard, but Americans are far from mere puppets in the hands of Silicon Valley. The puzzle here is that Zuboff rejects a rhetoric of “inevitabilism”—“the dictatorship of no alternatives”—but her book gives little basis for thinking we can avoid the new technologies of control, and she has little to say about specific alternatives herself. Prophecy you will find here; policy, not so much. She rightly argues that breaking up the big technology companies would not resolve the problems she raises, although antitrust action may well be justified for other reasons. Some reformers have suggested creating an entirely new regulatory structure to deal with the power of digital platforms and improve “algorithmic accountability”—that is, identifying and remedying the harms from algorithms. But all of that lies outside this book.

The more power major technology platforms exercise over politics and society, the more opposition they will provoke—not only in the United States but also around the world. The global reach of American surveillance capitalism may be only a temporary phase. Nationalism is on the march today, and the technology industry is in its path: countries that want to chart their own destiny will not continue to allow U.S. companies to control their platforms for communication and politics.

The competition of rival firms and political systems may also complicate any efforts to reform the technology industry in the United States. Would it be a good thing, for example, to heavily regulate major U.S. technology firms if their Chinese rivals gained as a result? The U.S. companies at least profess liberal democratic values. The trick is passing laws to hold them to these values. If Zuboff’s book helps awaken a countermovement to achieve that result, we may yet be able to avoid the dark future she sees being born today.
